Introduction

This is part 1 of a five-part series taking a closer look at spatial computing and how it is driving us towards web 3.0 and the metaverse.

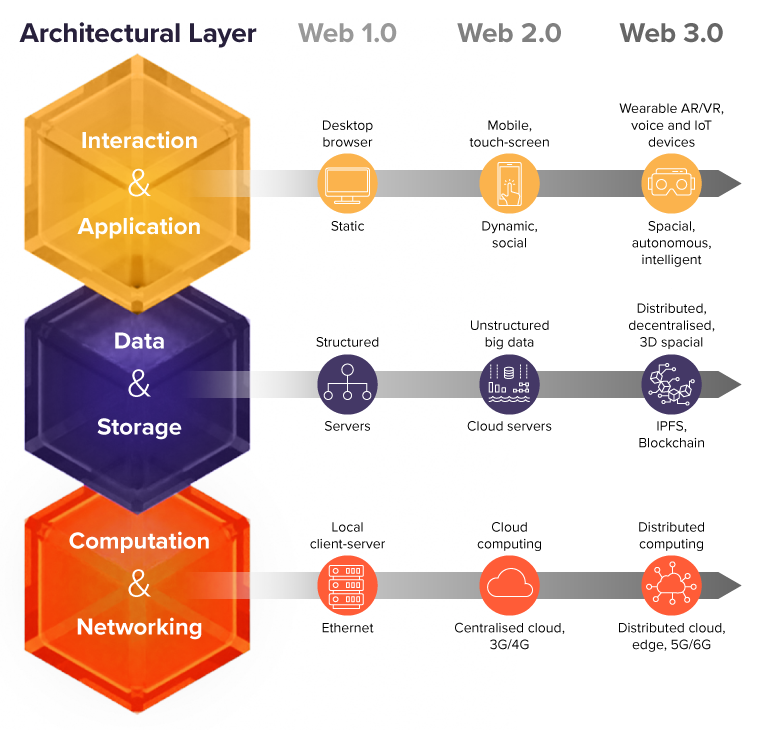

We will work our way down the web 3.0 technology stack pictured here, exploring some of the key technologies and concepts that are bringing us closer to the vision of the metaverse and discussing the opportunities and challenges at hand along the way.

(Source: Hadean, adapted from Deloitte, “The Spatial Web and Web 3.0”)

Starting at the interaction and application layer, we will work our way down towards the underlying infrastructure:

- Part 1: Why is Spatial Computing a Key Component?

- Part 2: The Industrial Metaverse

- Part 3: Decentralised Architectures and the Open Metaverse

- Part 4: Computing for Web 3.0 and the Metaverse

- Part 5: Networking for Web 3.0 and the Metaverse

So without further ado, let’s get cracking with part 1!

First things first, let’s clear the air with definitions

I like to define the Metaverse as a term that indicates the evolution of how we interact with the internet – driven by the convergence of a multitude of technologies (in particular 3D and spatial computing) and societal trends where people create and engage in shared experiences through virtual and augmented environments.

It is part of the web 3.0 technology stack illustrated above, which signals the broader evolution of the internet (beyond just the interaction and application layer), driven by distributed and decentralised technology architectures that enable open and trustless peer-to-peer systems. Web 3.0 / Web3 is the third iteration of the internet, and it aims to put more control back in the hands of its users by disintermediating third-party data brokers.

Why is the evolution of how we interact with computers a big deal?

Computers enable us to access the internet, and with each cycle of how we interact with them, computers have become easier to use and more accessible, and society has reaped ever greater benefits.

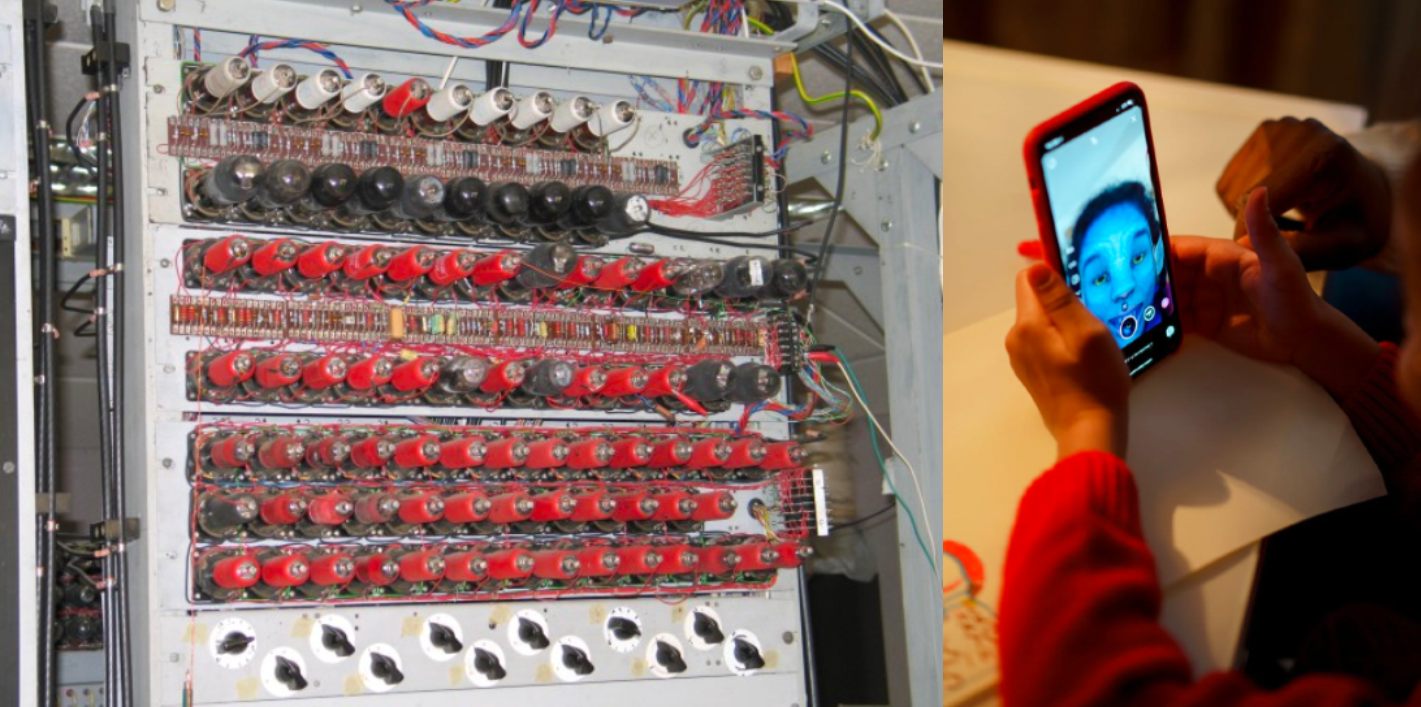

For context, we moved from the days of computers being operated by wires, to punch cards, to command line interfaces and then pivotally to graphical user interfaces (GUIs) that underpin how we interact with computers and the internet today. Accessibility was further propelled by smartphones, which brought billions of people online and added further dimensions of interactivity through touch screens. Nowadays we see people use their phones and wearables to interact seamlessly with the world around them – through voice commands, QR codes, contactless payments, and various AR applications.

Computers began digitising numbers and text, then various types of media, and are now gaining digitised spatial awareness. Notice that with increased digitisation and new ways of interacting with computers, the old formats don’t become instantly redundant. We simply augment and enhance the way we access and interact with computers and the internet.

(Source: Next Reality)

Similarly, web 3.0 and the metaverse will enhance certain experiences and gain greater adoption in some industries more than others.

Nevertheless, the next great computing interface is emerging and spatial computing is at the centre of it. There are many technologies represented by two-letter acronyms that are working towards this (VR, AR, XR…), but they all fundamentally rely on 3D interactive content to bridge physical and virtual worlds.

The inevitability of a spatial interface

Our world is 3D; we are born into, and grow up living in, 3D space. Our vision, cognition, senses and movements are shaped within the context of these dimensions.

They say a picture is worth a thousand words. Indeed, the explosion of data from a multitude of sources is making it necessary to advance towards a more ‘eye-centric’ interface that will enable us to interact in a much more productive and efficient manner.

Spatial interfaces will enable us to view, edit, and share data in a much more interactive and intuitive manner. Imagine the worksheets of the future where we are able to visualise and interact with the data and treat it like a simulation space, running multiple ‘what-if’ scenarios to inform our decision making and much more.

With an increasingly remote workforce, the future of how we work is attracting tremendous interest. Leading technology companies are investing in R&D, and investors are funding startups that aim to bridge physical and virtual collaboration and overcome our favourite workplace term of the last couple of years, ‘Zoom fatigue’.

(Source: Verge)

Wait, but I thought the Metaverse was mainly social experiences?

However, it is not just about productivity. Of course, today’s popularised depictions of the metaverse are built mostly around socialising and entertainment.

They are enabling anyone to enjoy immersive experiences: be it virtual concerts on traditional games such as Fortnite, Minecraft and Roblox, transacting digital assets on Decentraland or playing to earn on Axie Infinity.

(Source: Verge)

Events such as the global pandemic have certainly catalysed society to explore some of these concepts sooner than we otherwise might have. And some of these experiences have been the only way to interact with friends and family during isolation.

As we ease our way out of the pandemic, when all is said and done, society will continue to engage in these immersive experiences, and they are only trending upwards.

Game Engines

What do all these virtual worlds have in common? They are all built on game engines, which, as the name suggests, hail from the gaming industry. They are software suites that enable developers to build and run video games. However, saying that these tools are just used to develop video games does them an injustice, because these platforms are being used to create and simulate much more. These tools will be consequential in building the metaverse.

In fact, the most popular game engines don’t refer to themselves by this phrase. Unreal Engine describes itself as a ‘Real-Time 3D Creation Tool’ and Unity describes itself as a ‘Real-Time Development Platform’. When Unity IPO’d in 2020, it also sized its market beyond gaming as much larger than its core gaming market.

The Metaverse is so much more than just gaming and entertainment. In part 2 of this series, we will explore the enterprise themes of the ‘Industrial Metaverse’ in more detail.

---

If you would like to go deeper on some of the concepts here, please read these blogs, which I recommend and which inspired this post.

- How to Explain the ‘Metaverse’ to Your Grandparents, by Aaron Frank (Medium, January 2022)

- An Introduction to The Spatial Web, by Gabriel Rene (The Startup, Medium)
